If you’ve ever been inside a professional studio, you know there’s something almost sacred about the control room.

Faders, knobs, glowing monitors—each dial adjusted with patience, each decision shaped by a producer’s ear.

But if you step into a studio in 2025, you may notice something new. It’s not a person hunched over the console.

It’s software running silently in the background, suggesting EQ tweaks or tightening compression automatically.

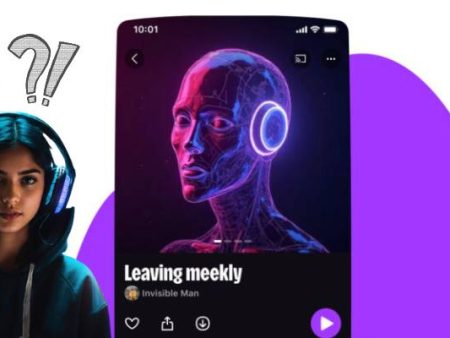

Welcome to the era of AI in the studio—where algorithms are no longer just toys for hobbyists.

They’re real collaborators in the mixing and mastering process. And whether you’re excited or skeptical, you can’t ignore the shift.

But here’s the question: is this a revolution, or just another tool? Are producers handing over the craft, or are they simply expanding their palette with algorithms that save time?

And underneath all the hype, what does this mean for the soul of music itself?

Why Mixing & Mastering Matter So Much

Before diving into AI, let’s pause. Why do mixing and mastering carry such weight in music?

Mixing is the art of balancing every element in a track—vocals, drums, guitars, synths—so they coexist without stepping on each other.

Mastering is the final polish: adjusting loudness, EQ, and dynamics to make the track sound great everywhere, from earbuds to stadium speakers.

In short, it’s the difference between a demo that feels flat and a record that feels alive.

Historically, this process required years of training, golden ears, and expensive gear. Now, algorithms are stepping in to democratize it.
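To make “adjusting loudness” concrete, here is a minimal Python sketch of one small piece of mastering. It works out how much static gain brings a track to a target level without pushing its loudest peak past a ceiling. This is an illustration under stated assumptions, not how any particular mastering tool works. It measures plain RMS level in dBFS as a simplified stand-in for the LUFS loudness measurement (ITU-R BS.1770) that streaming services actually use. The function names, the -14 dB target, and the -1 dB ceiling are all hypothetical choices made for the example.

```python
import math

def rms_dbfs(samples: list[float]) -> float:
    """RMS level of float samples (full scale = 1.0), in dBFS.
    A simplified stand-in for a true LUFS loudness measurement."""
    if not samples:
        raise ValueError("need at least one sample")
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    return 20 * math.log10(rms) if rms > 0 else float("-inf")

def peak_dbfs(samples: list[float]) -> float:
    """Highest absolute sample value, in dBFS."""
    peak = max(abs(s) for s in samples)
    return 20 * math.log10(peak) if peak > 0 else float("-inf")

def gain_to_target(samples: list[float], target_dbfs: float = -14.0,
                   ceiling_dbfs: float = -1.0) -> float:
    """Static gain (dB) that moves the RMS level to target_dbfs, reduced if
    needed so the loudest peak ends up at or below ceiling_dbfs.
    Target and ceiling defaults are illustrative assumptions."""
    wanted = target_dbfs - rms_dbfs(samples)
    headroom = ceiling_dbfs - peak_dbfs(samples)
    return min(wanted, headroom)

def apply_gain(samples: list[float], gain_db: float) -> list[float]:
    factor = 10 ** (gain_db / 20)  # convert dB to a linear multiplier
    return [s * factor for s in samples]

# A quiet test tone: a 0.1-amplitude sine, 48 samples per cycle.
tone = [0.1 * math.sin(2 * math.pi * n / 48) for n in range(4800)]
gain = gain_to_target(tone)
print(f"{rms_dbfs(tone):.1f} dBFS, suggested gain {gain:.1f} dB")
# prints "-23.0 dBFS, suggested gain 9.0 dB"
```

The design choice worth noticing is the `min()`: a very peaky track gets less gain than its RMS level alone would call for. A real mastering chain would typically add a limiter at that point to keep raising loudness while holding peaks down, which this sketch deliberately leaves out.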

From Knobs to Neural Networks: The Rise of AI Mixing

So how did we get here? Algorithms entered the studio gradually: first with pitch correction like Auto-Tune, then with “smart” EQs and compressors that analyzed frequencies in real time.

Over the past decade, these tools evolved into full-scale platforms like LANDR, iZotope’s Ozone, and CloudBounce.

These systems use machine learning trained on thousands of professionally mastered tracks. By recognizing patterns—like how pop vocals are EQ’d differently from jazz vocals—they can suggest or even apply adjustments automatically.

In practice, it means you can upload a raw track, and within minutes, an AI will give you a mastered version.

It won’t always rival a Grammy-winning engineer, but it’s getting close enough that producers are paying attention.
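The systems above learn, for example, how pop vocals are usually EQ’d, and then suggest adjustments. Vendors such as LANDR and iZotope don’t publish their exact methods, so what follows is only a much simpler stand-in: a rule-based “reference matching” sketch in Python, not a trained model. It assumes per-band levels in dB have already been measured for the track and for a target profile. In a learned system, that profile might be derived from many finished masters in a genre. The band names, the levels, and the `suggest_eq` function are hypothetical.

```python
def suggest_eq(track_db: dict[str, float], reference_db: dict[str, float],
               max_change_db: float = 6.0, tolerance_db: float = 1.0) -> dict[str, float]:
    """Suggest per-band gain changes (dB) that nudge the track's tonal
    balance toward a reference profile (hypothetical heuristic)."""
    # Compare tonal shape, not overall loudness: remove the average level
    # difference between track and reference before comparing bands.
    offset = sum(track_db[b] - reference_db[b] for b in reference_db) / len(reference_db)
    suggestions = {}
    for band, ref_level in reference_db.items():
        diff = ref_level - (track_db[band] - offset)
        if abs(diff) < tolerance_db:
            continue  # close enough; leave this band alone
        # Clamp so a single suggestion never swings a band too far.
        suggestions[band] = round(max(-max_change_db, min(max_change_db, diff)), 2)
    return suggestions

# Illustrative numbers only, not measurements from any real track or genre.
reference = {"low": -18.0, "low-mid": -20.0, "mid": -22.0, "high-mid": -24.0, "high": -28.0}
track = {"low": -16.0, "low-mid": -17.0, "mid": -22.0, "high-mid": -24.0, "high": -30.0}
print(suggest_eq(track, reference))  # {'low': -1.4, 'low-mid': -2.4, 'high': 2.6}
```

Removing the average offset first means a track that is simply quieter overall isn’t told to boost every band. Only its balance is compared. Clamping each suggestion keeps one odd measurement from producing an extreme EQ move.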

The Practical Side: What AI Does Well

Let’s be fair: AI excels at certain aspects of mixing and mastering.

- Speed: What takes a human engineer hours, AI does in minutes.

- Consistency: No fatigue, no mood swings—settings are applied uniformly.

- Accessibility: Musicians without budgets can now produce radio-ready tracks.

- Pattern Recognition: Algorithms can detect and correct frequency clashes that even trained ears might miss.

For beginners, this is a game-changer. For pros, it’s like having an assistant who handles the boring stuff—so they can focus on creativity.
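Pattern recognition for frequency clashes can also be sketched in a few lines. The Python below is a hypothetical, rule-based check rather than the machine-learning approach commercial tools use. It assumes each source’s level per frequency band has already been measured, for example with an FFT. The band labels, the -30 dB floor, and the 3 dB gap are arbitrary illustrative thresholds. A band is flagged when both sources are prominent there and neither clearly dominates.

```python
def find_clashes(levels_a: dict[str, float], levels_b: dict[str, float],
                 floor_db: float = -30.0, max_gap_db: float = 3.0) -> list[str]:
    """Return bands where both sources sit above floor_db and their levels
    differ by at most max_gap_db (hypothetical clash rule)."""
    clashes = []
    for band, a in levels_a.items():
        if band not in levels_b:
            continue  # only compare bands measured for both sources
        b = levels_b[band]
        both_prominent = a > floor_db and b > floor_db
        if both_prominent and abs(a - b) <= max_gap_db:
            clashes.append(band)
    return clashes

# Illustrative per-band levels (dB) for a vocal and a guitar.
vocal = {"200 Hz": -24.0, "1 kHz": -18.0, "3 kHz": -15.0, "8 kHz": -28.0}
guitar = {"200 Hz": -20.0, "1 kHz": -17.0, "3 kHz": -16.0, "8 kHz": -40.0}
print(find_clashes(vocal, guitar))  # ['1 kHz', '3 kHz']
```

A tool built on this idea might then propose a small cut on the less important source in the flagged bands, much like the “lower the midrange on the guitar” kind of suggestion producers report.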

But Can AI Really Match Human Ears?

Here’s where the debate gets fiery. Music isn’t math, not entirely. A producer doesn’t just balance frequencies; they make artistic decisions.

Should the vocal feel intimate, almost whispering in your ear? Should the bass overwhelm, creating a sense of urgency?

AI can’t feel these intentions. It can only approximate them from patterns in its dataset. But the “soul” of a mix, the subtle choices that make Billie Eilish sound haunting or Kendrick Lamar sound explosive, still comes from human instinct.

To me, this is where AI falls short. It’s excellent at routine corrections, but less so at emotional nuance.

Can AI Replace Stock Tools?

Here’s an honest question many producers ask: can AI replace stock plugins in digital audio workstations (DAWs)?

In some cases, yes. AI mastering services can outperform basic stock compressors or limiters. Why? Because they draw on patterns learned from many finished tracks and can make several coordinated adjustments automatically, while a stock plugin only does what you dial in.

But stock tools still matter. They’re versatile, reliable, and in the hands of an experienced producer, they can outshine any AI preset. The future likely isn’t one or the other, but both—AI for speed, stock tools for control.

Real Stories: Producers Using AI Today

I spoke with a producer who works mostly in indie pop. She uses iZotope’s Neutron not to finish her mixes, but to guide her. “It’s like having a second opinion,” she told me.

“If the AI suggests lowering the midrange on the guitar, I listen closer. Sometimes I agree, sometimes I don’t. But it makes me more attentive.”

Another producer, focused on hip-hop, uses LANDR for quick masters of demo tracks. “Clients don’t want to wait days for a polished version,” he explained.

“AI masters give them something to vibe with right away. Then, if they want a final version, I still do it manually.”

That’s the pattern I keep seeing. AI isn’t replacing engineers—it’s reshaping workflows.

Beyond Mixing: Turning Lyrics Into Songs

It’s not just about mixing and mastering. Some platforms now turn lyrics into songs, pairing text with melodies and production automatically. Imagine writing a poem and hearing it sung back as a ballad.

For producers, this means AI isn’t just polishing audio—it’s generating raw material to work with. It’s the digital equivalent of a co-writer who’s always available, though not always inspired.

This is where things start to blur. If AI can generate lyrics, compose melodies, and mix the track, what’s left for humans?

My opinion: everything that matters. We’re still the ones with stories, lived experiences, and cultural contexts that machines can’t replicate.

The Expansion into Streaming

One of the biggest shifts is the rise of AI-generated music in streaming. Platforms like Spotify have reportedly filled some playlists with machine-made background tracks.

These songs may not top charts, but they rack up millions of plays in mood playlists like “Focus” or “Sleep.”

For producers, this creates a new challenge. If algorithms can churn out endless lo-fi beats or ambient playlists, where does that leave human producers trying to carve out a niche?

On the flip side, it also creates opportunities: AI can handle filler content, freeing humans to focus on artistry.

The question isn’t whether AI music belongs in streaming—it’s how much space it will take up, and whether platforms will be transparent about it.

The Tools Producers Are Using Right Now

Here are some of the most common AI music apps that producers are experimenting with for mixing and mastering:

- LANDR: Cloud-based mastering with distribution options.

- iZotope Ozone & Neutron: Industry-standard AI-assisted plugins for mastering and mixing.

- CloudBounce: Fast, affordable mastering alternative.

- Sonible smart:EQ & smart:comp: Intelligent EQ and compression.

- Masterchannel: Newer platform offering quick AI masters with customizable settings.

These aren’t gimmicks anymore. They’re becoming standard parts of the workflow.

The Limitations Nobody Likes to Talk About

Despite the excitement, we need to be honest about limitations.

- Homogenization: AI tends to produce safe, predictable results. Great for background music, less so for innovation.

- Overreliance: Young producers risk skipping the fundamentals, trusting the algorithm too much.

- Cultural Blind Spots: AI trained on Western datasets may misinterpret or flatten non-Western music traditions.

- Legal Gray Areas: If AI masters your track, who owns the intellectual property? The debate isn’t settled.

These aren’t small concerns. They’re growing pains in a new era.

My Personal Take: A Double-Edged Sword

I’ll be honest: the first time I used AI mastering, I felt conflicted. It was fast, clean, and—if I’m being brutally truthful—better than what I could have done at the time.

But it also felt empty. Like skipping the part of the journey that makes you sweat and struggle, the part that teaches you.

Over time, I’ve shifted my perspective. I now see AI as a safety net. A way to check my mixes, not to replace them.

I’d never hand over the entire process, because I love the messiness of human mixing. But I also won’t ignore the convenience.

That tension—admiration mixed with unease—is where I think most of us will live for the next decade.

The Bigger Picture: Redefining Creativity in the Studio

So where does this leave us? Here’s how I see it:

- For beginners: AI levels the playing field. You don’t need thousands of dollars to sound decent.

- For professionals: AI saves time and offers a second set of “ears.”

- For the industry: AI creates both opportunities (new revenue streams) and challenges (oversaturation, ethical debates).

And perhaps most importantly, AI is forcing us to ask what creativity really means. If the machine can do the technical heavy lifting, what’s left for us?

The answer is clear: the vision, the emotion, the imperfections that algorithms will never fully capture.

Conclusion: The Studio Isn’t Dying—It’s Evolving

The idea of AI in the studio isn’t about replacing producers. It’s about changing what their role looks like. Less time on repetitive EQ tasks, more time on storytelling. Less focus on rules, more on risks.

Mixing and mastering may be increasingly algorithmic, but music itself will always remain human at its core.

Because behind every plugin, every preset, every polished track, there’s still someone chasing a feeling—hoping it resonates with another person on the other side of the speakers.

That’s not something you can automate.